font-family: 'Helvetica' width: 1024 height: 768

A two-day workshop in two hours (well, not quite)

Hilmar Lapp, Duke University

- Only 6 of 53 landmark oncology papers confirmed

- 43 of 67 drug target validation studies failed to reproduce

- Effect size overestimation is common

incremental: true

Lack of reproducibility in science causes significant issues

- For science as an enterprise

- For other researchers in the community

- For public policy

-

Science retracted (without lead author's consent) a study of how canvassers can sway people's opinions about gay marriage

-

Original survey data was not made available for independent reproduction of results (and survey incentives misrepresented, and sponsorship statement false)

-

Two Berkeley grad students attempted to replicate the study and discovered that the data must have been faked.

Lack of reproducibility in science causes significant issues

- For science as an enterprise

- For other researchers in the community

- For public policy

- For patients

From the authors of Low Dose Lidocaine for Refractory Seizures in Preterm Neonates (doi:10.1007/s12098-010-0331-7):

The article has been retracted at the request of the authors. After carefully re-examining the data presented in the article, they identified that data of two different hospitals got terribly mixed. The published results cannot be reproduced in accordance with scientific and clinical correctness.

Source: Retraction Watch

Lack of reproducibility in science causes significant issues

- For science as an enterprise

- For other researchers in the community

- For policy making

- For patients

- For oneself as a researcher

[Bruno Oliveira](https://plus.google.com/+BrunoOliveira/posts/MGxauXypb1Y)

- Research that is difficult to reproduce impedes your future self, and your lab.

- More reproducible research is faster to report, in particular when research is dynamic. (Think data, tools, parameters, etc.)

- More reproducible research is also faster to resume or to build on by others.

Reproducible science accelerates scientific progress.

- Dependency hell: Most software has recursive and differing dependencies. Any one of them can fail to install or conflict with another, and the exact versions installed can affect the results.

- Documentation gaps: Code can easily be very difficult to understand if not documented. Documentation gaps and errors may be harmless for experts, but are often fatal for "method novices".

- Unpredictable evolution: Scientific software evolves constantly, and often in drastic rather than incremental ways. As a result, outputs, algorithms, and parameters can change unpredictably, and code that worked only a few months ago can suddenly fail.
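One widely used way to soften the version problem is to record the exact R and package versions next to the results. A minimal sketch using base R's `sessionInfo()`; the output file name `session-info.txt` and the temporary directory are assumptions made for illustration only:

```r
# Record the R version and loaded package versions alongside the results.
# The file name and location are illustrative, not part of the workshop material.
info_file <- file.path(tempdir(), "session-info.txt")
writeLines(utils::capture.output(utils::sessionInfo()), info_file)

# Show the first few recorded lines
cat(head(readLines(info_file), 3), sep = "\n")
```

Archiving such a file with each analysis run gives a future reader at least a starting point for rebuilding the same software environment.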

See an experiment on reproducing reproducible computational research

- Curriculum development hackathon held December 11-14, 2014, at NESCent

- 21 participants comprising statisticians, biologists, bioinformaticians, open-science activists, programmers, graduate students, postdocs, untenured and tenured faculty

- Outcome: open source, reusable curriculum for a two-day workshop on reproducibility for computational research

- Inaugural workshop May 14-15, 2015 at Duke in Durham, NC

- June 1-2, 2015 at iDigBio in Gainesville, FL

- September 24-25, 2015, at the Duke Marine Lab in Beaufort, NC

- Have made incremental improvements to curriculum and material in between workshops

Day 1

- Motivation of and introduction to Reproducible Research

- Best practices for file naming and file organization

- Best practices for tabular data

- Literate programming and executable documentation of data modification

Day 2

- Version control and Git

- Why automate?

- Transforming repetitive R script code into R functions

- Automated testing and integration testing

- Sharing, publishing, and archiving for data and code

type: titleonly

type: prompt

This is a two-part exercise:

Part 1: Analyze + document

Part 2: Swap + discuss

type: prompt

Complete the following tasks and write instructions / documentation for your

collaborator to reproduce your work starting with the original dataset

(data/gapminder-5060.csv).

-

Download material: http://bit.ly/cbb-retreat -> Releases -> Latest

-

Visualize life expectancy over time for Canada in the 1950s and 1960s using a line plot.

-

Something is clearly wrong with this plot! Turns out there's a data error in the data file: life expectancy for Canada in the year 1957 is coded as 999999; it should actually be 69.96. Make this correction.

-

Visualize life expectancy over time for Canada again, with the corrected data.

Stretch goal: Add lines for Mexico and United States.
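One possible way to make the correction reproducible is to script it rather than edit the CSV by hand. The sketch below is only an illustration: it uses a small toy data frame in place of `data/gapminder-5060.csv`, and every value except the miscoded and corrected 1957 entries is an illustrative placeholder.

```r
# Toy stand-in for data/gapminder-5060.csv. Only the 1957 values (999999 and
# 69.96) come from the exercise text; the other rows are placeholders.
gap_5060 <- data.frame(
  country = "Canada",
  year    = c(1952, 1957, 1962, 1967),
  lifeExp = c(68.75, 999999, 71.30, 72.13),
  stringsAsFactors = FALSE
)

# Apply the correction in code, so the raw file stays untouched and the fix
# is documented and repeatable.
fix_row <- gap_5060$country == "Canada" & gap_5060$year == 1957
gap_5060$lifeExp[fix_row] <- 69.96

# Line plot of life expectancy over time for Canada
canada <- gap_5060[gap_5060$country == "Canada", ]
plot(canada$year, canada$lifeExp, type = "l",
     xlab = "Year", ylab = "Life expectancy (years)", main = "Canada")
```

Because the fix lives in the script, a collaborator who starts from the original dataset gets the same corrected plot without any manual steps.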

type: prompt

Introduce yourself to your collaborator.

-

Swap instructions / documentation with your collaborator, and try to reproduce their work, first without talking to each other. If your collaborator does not have the software they need to reproduce your work, we encourage you to either help them install it or walk them through it on your computer in a way that would emulate the experience. (Remember, this could be part of the irreproducibility problem!)

-

Then, talk to each other about challenges you faced (or didn't face) or why you were or weren't able to reproduce their work.

type: prompt

This exercise:

- What tools did you use (Excel, R / Python, Word / plain text etc.)?

- What made it easy / hard to reproduce your partner's work?

In a lab setting:

- What would happen if your collaborator is no longer available to walk you through their analysis?

- What would have to happen if you

- had to swap out the dataset or extend the analysis?

- caught further errors and had to re-create the analysis?

- had to revert to the original dataset?

type: titleonly

-

Documentation: difference between binary files (e.g. docx) and text files and why text files are preferred for documentation

- Protip: Use markdown to document your workflow so that anyone can pick up your data and follow what you are doing

- Protip: Use literate programming so that your analysis and your results are tightly connected, or better yet, inseparable

-

Organization: tools to organize your projects so that you don't have a single folder with hundreds of files

-

Automation: the power of scripting to create automated data analyses

-

Dissemination: publishing is not the end of your analysis, rather it is a way station towards your future research and the future research of others

type: titleonly

Provenance with results pasted into manuscript:

- Which code?

- Which data?

- Which context?

vs. Provenance of figures with Rmarkdown reports:

type: titleonly

Life expectancy shouldn't exceed even the most extreme age observed for humans.

if (any(gap_5060$lifeExp > 150)) {
  stop("improbably high life expectancies")
}

Error in eval(expr, envir, enclos): improbably high life expectancies

The library `testthat` allows us to make this a little more readable:

library(testthat)

expect_that(any(gap_5060$lifeExp > 150), is_false(),
            "improbably high life expectancies")

Error: any(gap_5060$lifeExp > 150) isn't false

improbably high life expectancies
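The same kind of guard can also be wrapped in a small reusable function using base R only. This is a sketch, not part of the workshop code: the helper name `check_life_exp`, its `max_age` argument, and the toy data values (apart from the miscoded 999999) are all hypothetical.

```r
# Hypothetical helper: stop with an informative message if any life
# expectancy is missing, non-positive, or above a plausible maximum.
check_life_exp <- function(df, max_age = 150) {
  bad <- which(is.na(df$lifeExp) | df$lifeExp <= 0 | df$lifeExp > max_age)
  if (length(bad) > 0) {
    stop("improbable life expectancies in row(s): ",
         paste(bad, collapse = ", "))
  }
  invisible(TRUE)
}

# Toy data frame (illustrative values, including the miscoded entry)
gap_5060 <- data.frame(year    = c(1952, 1957, 1962),
                       lifeExp = c(68.75, 999999, 71.30))

# Capture the error message instead of aborting, so the outcome can be shown
msg <- tryCatch(
  {
    check_life_exp(gap_5060)
    "all values plausible"
  },
  error = function(e) conditionMessage(e)
)
print(msg)
```

Reporting the offending row numbers makes the failure easier to act on than a bare "something is wrong" message.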

type: titleonly

- There are going to be files. Lots of files.

- They will change over time.

- They will have differing relationships to each other.

File organization and naming are effective weapons against chaos.

- Machine readable

- easy to search for files later

- easy to narrow file lists based on names

- easy to extract info from file names (regexp-friendly)

- Human readable

- name contains information on content, or

- name contains semantics (e.g., place in workflow)

- Plays well with default ordering

- use numeric prefix to induce logic order

- left pad numbers with zeros

- use ISO 8601 standard (YYYY-mm-dd) for dates
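These rules are easy to apply when file names are generated in code. A minimal sketch, reusing the date, assay, and experiment labels from the well-plate example in this deck; the unpadded well labels are made up for illustration:

```r
# Components of the file name; the well labels are illustrative.
run_date   <- as.Date("2013-06-26")
assay      <- "BRAFASSAY"
experiment <- "Plsmd-CL56-1MutFrac"
wells      <- c("A1", "A2", "B10")

# Left-pad the well column with zeros so that default (alphabetical)
# ordering matches the numeric order: A01, A02, ..., A10
well_row <- substr(wells, 1, 1)
well_col <- as.integer(substring(wells, 2))
padded   <- sprintf("%s%02d", well_row, well_col)

# ISO 8601 date first, then "_" between fields and "-" within a field
fnames <- sprintf("%s_%s_%s_%s.csv",
                  format(run_date, "%Y-%m-%d"), assay, experiment, padded)
print(fnames)
```

Using one delimiter between fields and another within fields is what keeps the names easy to split apart again later.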

type: prompt

Your data files contain readings from a well plate, one file per well,

using a specific assay run on a certain date, after a certain treatment.

- Devise a naming scheme for the files that is both "machine" and "human" readable.

$ ls *Plsmd*

2013-06-26_BRAFASSAY_Plsmd-CL56-1MutFrac_A01.csv

2013-06-26_BRAFASSAY_Plsmd-CL56-1MutFrac_A02.csv

2013-06-26_BRAFASSAY_Plsmd-CL56-1MutFrac_A03.csv

2013-06-26_BRAFASSAY_Plsmd-CL56-1MutFrac_B01.csv

2013-06-26_BRAFASSAY_Plsmd-CL56-1MutFrac_B02.csv

...

2013-06-26_BRAFASSAY_Plsmd-CL56-1MutFrac_H03.csv

> list.files(pattern = "Plsmd") %>% head

[1] 2013-06-26_BRAFASSAY_Plsmd-CL56-1MutFrac_A01.csv

[2] 2013-06-26_BRAFASSAY_Plsmd-CL56-1MutFrac_A02.csv

[3] 2013-06-26_BRAFASSAY_Plsmd-CL56-1MutFrac_A03.csv

[4] 2013-06-26_BRAFASSAY_Plsmd-CL56-1MutFrac_B01.csv

[5] 2013-06-26_BRAFASSAY_Plsmd-CL56-1MutFrac_B02.csv

[6] 2013-06-26_BRAFASSAY_Plsmd-CL56-1MutFrac_B03.csv

# flist holds the six file names printed above
meta <- stringr::str_split_fixed(flist, "[_\\.]", 5)

colnames(meta) <- c("date", "assay", "experiment", "well", "ext")

meta[,1:4]

     date assay experiment well

[1,] "2013-06-26" "BRAFASSAY" "Plsmd-CL56-1MutFrac" "A01"

[2,] "2013-06-26" "BRAFASSAY" "Plsmd-CL56-1MutFrac" "A02"

[3,] "2013-06-26" "BRAFASSAY" "Plsmd-CL56-1MutFrac" "A03"

[4,] "2013-06-26" "BRAFASSAY" "Plsmd-CL56-1MutFrac" "B01"

[5,] "2013-06-26" "BRAFASSAY" "Plsmd-CL56-1MutFrac" "B02"

[6,] "2013-06-26" "BRAFASSAY" "Plsmd-CL56-1MutFrac" "B03"
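The same metadata can be recovered without extra packages. A sketch in base R, assuming the file names follow the scheme above exactly (four fields separated by underscores, plus an extension); only two of the file names are included to keep it short:

```r
# Two of the file names from the listing above
flist <- c("2013-06-26_BRAFASSAY_Plsmd-CL56-1MutFrac_A01.csv",
           "2013-06-26_BRAFASSAY_Plsmd-CL56-1MutFrac_A02.csv")

# Drop the extension, then split each name on the field delimiter
stems <- tools::file_path_sans_ext(flist)
parts <- strsplit(stems, "_", fixed = TRUE)

# Bind the pieces into one row per file and name the columns
meta <- as.data.frame(do.call(rbind, parts), stringsAsFactors = FALSE)
names(meta) <- c("date", "assay", "experiment", "well")

# ISO 8601 dates parse without a format string
meta$date <- as.Date(meta$date)
print(meta)
```

Because the hyphen is reserved for use within a field, splitting on the underscore never breaks the experiment label apart.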

type: titleonly

class: centered-image

Noble, William Stafford. 2009. “A Quick Guide to Organizing Computational Biology Projects.” PLoS Computational Biology 5 (7): e1000424.

title: false

|

+-- data-raw/

| |

| +-- gapminder-5060.csv

| +-- gapminder-7080.csv

| +-- ....

|

+-- data-output/

|

+-- fig/

|

+-- R/

| |

| +-- figures.R

| +-- data.R

| +-- utils.R

| +-- dependencies.R

|

+-- tests/

|

+-- manuscript.Rmd

+-- make.R

- data-raw: the original data; you shouldn't edit or otherwise alter any of the files in this folder.

- data-output: intermediate datasets that will be generated by the analysis.

  - We write them to CSV files so we could share or archive them, for example if they take a long time (or expensive resources) to generate.

- fig: the folder where we can store the figures used in the manuscript.

- R: our R code (the functions)

  - Often easier to keep the prose separated from the code.

  - If you have a lot of code (and/or manuscript is long), it's easier to navigate.

- tests: the code to test that our functions are behaving properly and that all our data is included in the analysis.
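A layout like this can be set up in a few lines at the start of a project. The sketch below creates the skeleton in a temporary directory; the project name `my-project` is a placeholder, and in practice the root would be your actual project folder:

```r
# Hypothetical project root inside a temporary directory
project_root <- file.path(tempdir(), "my-project")

# Folders from the layout described above
dirs <- c("data-raw", "data-output", "fig", "R", "tests")
for (d in dirs) {
  dir.create(file.path(project_root, d),
             recursive = TRUE, showWarnings = FALSE)
}

# Empty placeholders for the top-level files
file.create(file.path(project_root, c("manuscript.Rmd", "make.R")))

print(list.files(project_root))
```

Starting every project from the same skeleton means collaborators (and your future self) know where to look before reading a single line of code.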

type: titleonly

make_ms <- function() {
  rmarkdown::render("manuscript.Rmd",
                    "html_document")
  invisible(file.exists("manuscript.html"))
}

clean_ms <- function() {
  res <- file.remove("manuscript.html")
  invisible(res)
}

make_all <- function() {
  make_data()
  make_figures()
  make_tests()
  make_ms()
}

clean_all <- function() {
  clean_data()
  clean_figures()
  clean_ms()
}

testthat includes a function called test_dir that will run tests

included in files in a given directory. We can use it to run all the tests in

our tests/ folder.

test_dir("tests/")

Let's turn it into a function, so we'll be able to add functionality to it a little later. We are also going to save it at the root of our working directory, in the file called make.R:

## add this to make.R
make_tests <- function() {
  test_dir("tests/")
}

type: titleonly

incremental: true

- funding agency / journal requirement

- community expects it

- increased visibility / citation

left: 70%

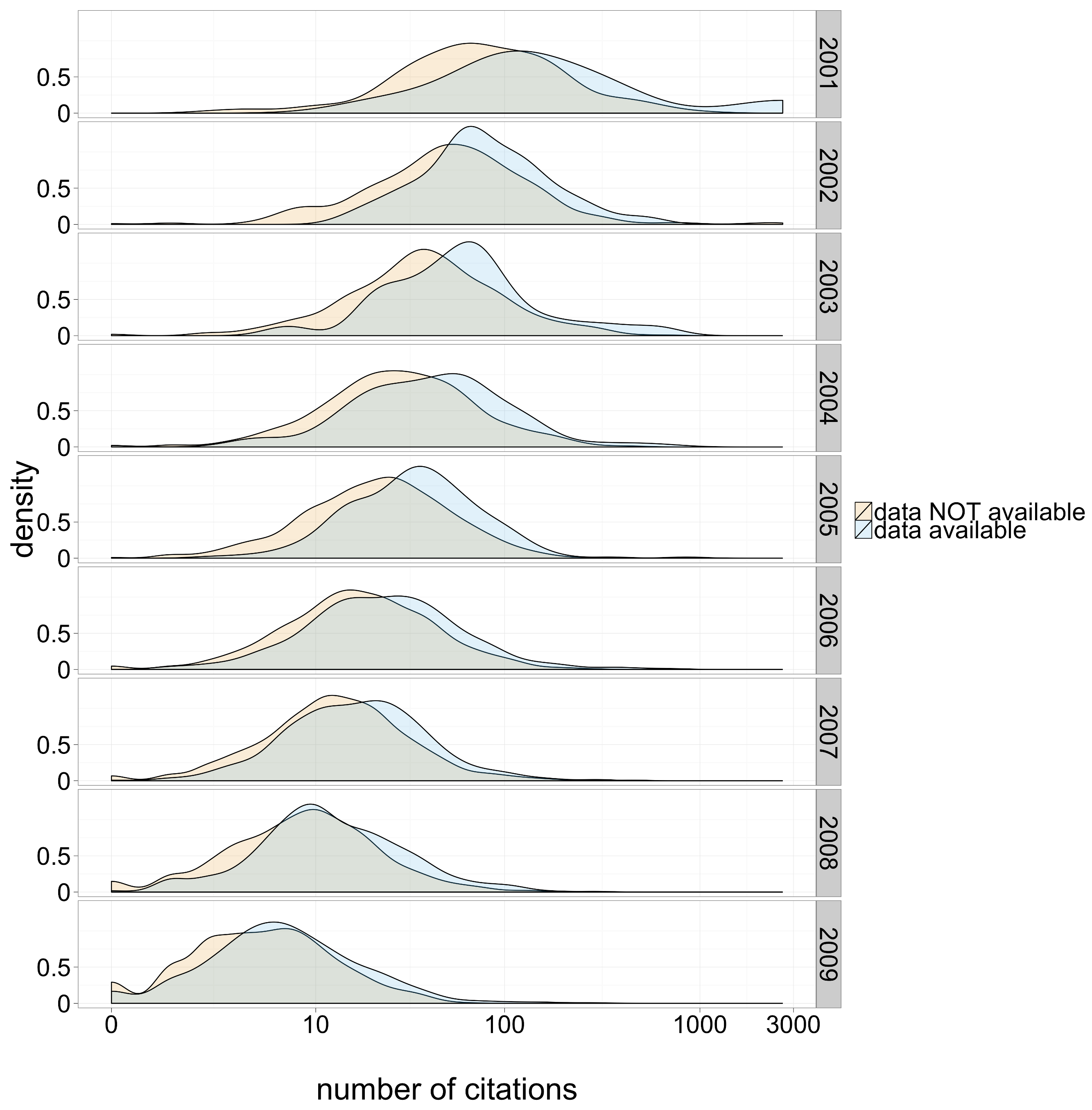

Piwowar & Vision (2013) "Data reuse and the open data citation advantage." PeerJ, e175

Figure 1: Citation density for papers with and without publicly available microarray data, by year of study publication.

- funding agency / journal requirement

- community expects it

- increased visibility / citation

- better research

left: 70%

Wicherts et al (2011) "Willingness to Share Research Data Is Related to the Strength of the Evidence and the Quality of Reporting of Statistical Results." PLoS ONE 6(11): e26828

Figure 1. Distribution of reporting errors per paper for papers from which data were shared and from which no data were shared.

- Domain-specific data repository (GenBank, PDB)

- Source code hosting service (GitHub, Bitbucket)

- Generic repository (Dryad, Figshare, Zenodo)

- Institutional repository

- Journal supplementary materials

- Domain-specific data repository (GenBank, PDB)

- Source code hosting service (GitHub, Bitbucket)

- Generic repository (Dryad, Figshare, Zenodo)

- Institutional repository

Journal supplementary materials

- Is there a domain specific repository?

- What are the backup & replication policies?

- Is there a plan for long-term preservation?

- Can people find your materials?

- Is it citable? (does it provide DOIs)

title: false

<iframe width="100%" height="700px" src="http://library.duke.edu/data/guides/data-management"></iframe>

Do's

- non-proprietary file formats

- text file formats (.csv, .tsv, .txt)

Don'ts

- proprietary file formats (.xls)

- data as PDFs or images

- data in Word documents
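In R, saving data in a plain-text, non-proprietary format takes one call. A minimal sketch; the data values and the file name are made up for illustration:

```r
# Made-up example data
measurements <- data.frame(well    = c("A01", "A02"),
                           reading = c(0.12, 0.34),
                           stringsAsFactors = FALSE)

# Write a plain CSV file (no row names, so the file holds only the data)
csv_file <- file.path(tempdir(), "2013-06-26_measurements.csv")
write.csv(measurements, csv_file, row.names = FALSE)

# Anyone can read it back, with R or any other tool that understands CSV
restored <- read.csv(csv_file, stringsAsFactors = FALSE)
print(restored)
```

A round trip like this is also a cheap check that nothing is lost or altered when the data is written out for sharing.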

type: titleonly

left: 70%

Morin, Andrew, Jennifer Urban, and Piotr Sliz. 2012. “A Quick Guide to Software Licensing for the Scientist-Programmer.” PLoS Computational Biology 8 (7): e1002598.

type: titleonly

[CC0](http://creativecommons.org/about/cc0)

From the Panton Principles:

[In] the scholarly research community the act of citation is a commonly held community norm when reusing another community member’s work. [...] A well functioning community supports its members in their application of norms, whereas licences can only be enforced through court action and thus invite people to ignore them when they are confident that this is unlikely.

Peng, R. D. “[Reproducible Research in Computational Science](https://doi.org/10.1126/science.1213847)” Science 334, no. 6060 (2011): 1226–1227

incremental: true

- Reproducible practices can be applied after the fact, but it's much harder.

- And now you're doing this for others, rather than for your own benefit.

- And seriously, you won't ever publish the stuff you're working on?

- Adopting practices for reproducible science from the outset pays off in multiple ways.

- It's easy and little work while the project is still small and contains few files.

- Now everything you do is reproducible. No painful considerations when it comes to sharing stuff.

- Your future self will reap the benefits.

- (Your lab will, too, but that's a side effect.)

- (Who should be leading this - you or your old-school advisor?)

- Organizing committee members and curriculum instructors

- Mine Çetinkaya-Rundel (Duke)

- Karen Cranston (Duke)

- Ciera Martinez (UC Davis)

- François Michonneau (iDigBio, U. Florida)

- Matt Pennell (U. Idaho)

- Tracy Teal (Data Carpentry)

- Dan Leehr (Duke)

- Inspiration: Sophie (Kershaw) Kay - Rotation Based Learning

- Funding and support:

- US National Science Foundation (NSF)

- National Evolutionary Synthesis Center (NESCent)

- Center for Genomic & Computational Biology (GCB), Duke University

This slideshow was generated as HTML from Markdown using RStudio.

The Markdown sources, and the HTML, are hosted on GitHub: https://github.com/Reproducible-Science-Curriculum/cbb-retreat

[RStudio](https://www.rstudio.com)

- Entire suppl. doc generated from Rmarkdown: Finnegan et al. 2015. “Paleontological Baselines for Evaluating Extinction Risk in the Modern Oceans.” Science 348 (6234): 567–70.

- Data Analysis for the Life Sciences - a book completely written in R markdown

- FitzJohn et al. 2014. “How Much of the World Is Woody?” The Journal of Ecology. doi:10.1111/1365-2745.12260. Start to end replicable analysis on GitHub.

- Boettiger et al. “RNeXML: A Package for Reading and Writing Richly Annotated Phylogenetic, Character, and Trait Data in R.” Methods in Ecology and Evolution, September. Code archive and DOI assignment at Zenodo