
parallel training and param efficient #4


Data and Model Parallel

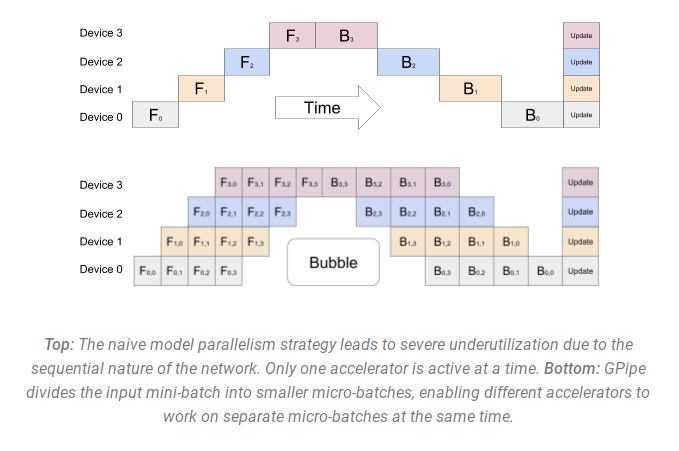

Start from the torch-model-parallel-tutorial: it speeds up training by about 50% using Pipeline Parallel, because that solves the GPU idling problem in Naive Model Parallel (Vertical). First, split the model into 2 parts on 2 GPUs, so that each GPU does its own part of the work. While one GPU processes a batch, the other GPU waits for it to finish, which leaves a lot of idle GPU time. This is called Naive Model Parallel (Vertical). Next, split the whole batch into smaller batch-splits. As soon as GPU0 finishes one small batch-split, GPU1 starts its task on that split while GPU0 moves on to the next one. Splitting the big batch into small ones reduces the total idle GPU time and raises the training speed. This upgrade is called Pipeline Parallel.

Model Parallel Review

huggingface-model-parallelism | new

In modern machine learning, the various approaches to parallelism are used to fit very large models onto limited hardware and to significantly speed up training.
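The idle-time argument from the tutorial can be made concrete with a small calculation. This is only a sketch under simplifying assumptions that are not in the notes: every stage takes the same time, only the forward pass is counted, and a batch-split costs exactly `1/num_splits` of the full batch. The function name `idle_fraction` is made up for illustration.

```python
def idle_fraction(num_gpus: int, num_splits: int) -> float:
    """Fraction of each GPU's time spent idle in a simple forward-only pipeline.

    Assumption: all stages are equally fast and one batch-split takes one
    time slot on every GPU.
    """
    # Each GPU is busy for one slot per batch-split.
    busy_slots = num_splits
    # The last GPU can only start after (num_gpus - 1) slots,
    # then it needs num_splits slots of its own.
    total_slots = num_splits + num_gpus - 1
    return 1 - busy_slots / total_slots


# Naive Model Parallel: the whole batch is a single split.
naive = idle_fraction(num_gpus=2, num_splits=1)
# Pipeline Parallel: the same batch cut into 4 batch-splits.
pipelined = idle_fraction(num_gpus=2, num_splits=4)

print(f"naive model parallel idle fraction: {naive:.2f}")
print(f"pipeline parallel idle fraction:    {pipelined:.2f}")
```

Under these assumptions, more batch-splits shrink the idle share, although real speedups also depend on communication cost and on how well small batch-splits use each GPU.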

Concepts

DataParallel (DP)

Built-in feature of PyTorch (rank, torchrun): torch-parallel-training, torch-ddp-youtube

DP vs DDP: https://huggingface.co/docs/transformers/v4.28.1/en/perf_train_gpu_many#dp-vs-ddp

Sync required.

Naive Model Parallel (Vertical) and Pipeline Parallel

As in the tutorial above, when a model is too big to fit on one GPU, it is easy to slice it vertically into several parts. The problem, as described above, is that one GPU sits idle while the other works on its part.

Pipeline Parallel (PP) is almost identical to naive MP, but it solves the GPU idling problem by chunking the incoming batch into micro-batches and artificially creating a pipeline, which allows different GPUs to participate in the computation concurrently.

PP introduces a new hyper-parameter to tune: chunks, which defines how many chunks of data are sent in a sequence through the same pipe stage. (PyTorch uses chunks, whereas DeepSpeed refers to the same hyper-parameter as GAS.)

Because of the chunks, PP introduces the concept of micro-batches (MBS). DP splits the global data batch into mini-batches, so with a DP degree of 4, a global batch size of 1024 is split into 4 mini-batches of 256 each (1024/4). If the number of chunks (or GAS) is 32, we end up with a micro-batch size of 8 (256/32). Each pipeline stage works on a single micro-batch at a time.

Problems with traditional Pipeline API solutions:
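The batch-size arithmetic above (global batch 1024, DP degree 4, 32 chunks) can be checked with a few lines of Python. The helper name `batch_sizes` is hypothetical and not part of PyTorch or DeepSpeed; it only restates the two divisions from the text.

```python
from typing import Tuple


def batch_sizes(global_batch: int, dp_degree: int, chunks: int) -> Tuple[int, int]:
    """Return (mini_batch, micro_batch) sizes for combined DP and PP."""
    if global_batch % dp_degree != 0:
        raise ValueError("global batch must be divisible by the DP degree")
    # DP: each data-parallel replica gets one mini-batch.
    mini_batch = global_batch // dp_degree
    if mini_batch % chunks != 0:
        raise ValueError("mini-batch must be divisible by the number of chunks")
    # PP: each mini-batch is cut into `chunks` micro-batches.
    micro_batch = mini_batch // chunks
    return mini_batch, micro_batch


mini, micro = batch_sizes(global_batch=1024, dp_degree=4, chunks=32)
print(f"mini-batch size: {mini}, micro-batch size: {micro}")
```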

Support in PyTorch, FairScale (FSDP), DeepSpeed, etc. DeepSpeed, Varuna and SageMaker use the concept of an Interleaved Pipeline.

Tensor Parallel (TP)

In Tensor Parallelism each GPU processes only a slice of a tensor and only aggregates the full tensor for operations that require the whole thing. The main building block of any transformer is a fully connected layer. If we look at the computation in matrix form, it is easy to see how the matrix multiplication can be split between multiple GPUs. Using this principle, we can update an MLP of arbitrary depth without any synchronization between GPUs until the very end, where we need to reconstruct the output vector from shards. Parallelizing the multi-headed attention layers is even simpler, since they are already inherently parallel thanks to having multiple independent heads.

Special considerations:
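To illustrate how one matrix multiplication can be split between GPUs, here is a minimal pure-Python sketch that splits the weight matrix by columns, lets each simulated "GPU" multiply against its own shard, and concatenates the partial outputs. The matrices and helper names are invented for illustration, and real frameworks do this with device tensors and collective communication rather than Python lists.

```python
def matmul(a, b):
    """Plain-Python matrix product of two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def split_columns(w, num_shards):
    """Split matrix w column-wise into num_shards equally wide shards."""
    cols = len(w[0])
    assert cols % num_shards == 0, "columns must divide evenly across shards"
    width = cols // num_shards
    return [[row[i * width:(i + 1) * width] for row in w] for i in range(num_shards)]


def concat_columns(parts):
    """Rebuild the full output by joining the shard outputs row by row."""
    return [sum((p[r] for p in parts), []) for r in range(len(parts[0]))]


x = [[1, 2], [3, 4]]              # input activations (2 x 2)
w = [[1, 0, 2, 1], [0, 1, 1, 3]]  # weight matrix (2 x 4)

shards = split_columns(w, num_shards=2)    # one shard per simulated GPU
partial = [matmul(x, s) for s in shards]   # each GPU works independently
y_parallel = concat_columns(partial)       # gather only at the very end
y_reference = matmul(x, w)                 # single-device result

print(y_parallel == y_reference)
```

A column split is chosen here because each shard yields complete output columns, so no communication is needed until the outputs are concatenated.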

DeepSpeed calls it tensor slicing.

Zero Redundancy Optimizer (ZeRO) Data Parallel

ZeRO-powered data parallelism (ZeRO-DP) is described in the following diagram from this blog post. Put simply, ZeRO is just the usual DataParallel (DP), except that instead of replicating the full model parameters, gradients and optimizer states, each GPU stores only a slice of them. At run time, when the full parameters of a given layer are needed, all GPUs synchronize to give each other the parts they are missing. That is all there is to it. More detail in zhihu. DeepSpeed and FairScale (FSDP) support ZeRO-DP stages 1+2+3.

ZeRO-Offload

Offload is a strategy that complements GPU VRAM with CPU RAM, because CPU RAM is cheaper. The goal is minimal GPU VRAM usage with an efficient communication strategy. With offload, FWD/BWD run in GPU VRAM because they are compute-heavy. Parameter updates, float2half conversion and optimizer states live in CPU RAM because they need less computation but a lot of memory. Other costs such as activations, buffers and fragmentation can be reduced using checkpointing.

Problem:
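The ZeRO-DP idea described above (each GPU keeps only a slice, and the slices are exchanged when a layer needs its full parameters) can be mimicked with plain Python lists. This is a toy simulation, not DeepSpeed or FairScale code: the `shard` and `all_gather` helpers are hypothetical stand-ins for the real partitioning and collective operations, and the parameter values are made up.

```python
def shard(values, world_size):
    """Split a flat parameter list into world_size contiguous slices."""
    size = -(-len(values) // world_size)  # ceiling division
    return [values[r * size:(r + 1) * size] for r in range(world_size)]


def all_gather(shards):
    """Simulate an all-gather: every rank ends up with the full parameter list."""
    full = [v for s in shards for v in s]
    return [list(full) for _ in shards]


layer_params = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
world_size = 4

# At rest, each rank stores only its own slice of the layer.
shards = shard(layer_params, world_size)
per_rank_storage = [len(s) for s in shards]

# Right before the layer runs, ranks exchange slices to rebuild it.
gathered = all_gather(shards)

print("stored per rank:", per_rank_storage)
print("rank 0 sees full layer:", gathered[0] == layer_params)
```

In this sketch each rank holds a quarter of the parameters at rest and the full layer only temporarily, which is the memory saving the notes describe.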

PyTorch Fully Sharded Data Parallel (FSDP)


Parameter efficient

[LoRA: Low-Rank Adaptation of Large Language Models, 2021/06, MSFT]
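As a rough illustration of why LoRA is parameter efficient: instead of updating a full d x k weight matrix, it trains two low-rank factors of shapes d x r and r x k. The sketch below only counts parameters. The sizes (4096, 4096, rank 8) are illustrative assumptions and are not taken from these notes.

```python
def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Number of trainable values in the two low-rank factors (d x r and r x k)."""
    return r * (d + k)


d, k, r = 4096, 4096, 8  # illustrative layer size and rank (assumed)

full = d * k                            # parameters updated by full fine-tuning
lora = lora_trainable_params(d, k, r)   # parameters updated by LoRA
ratio = lora / full

print(f"full fine-tuning: {full:,} params")
print(f"LoRA (r={r}):      {lora:,} params ({ratio:.4%} of full)")
```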

PEFT

https://github.com/huggingface/peft

Accelerate: https://huggingface.co/docs/accelerate/main/en/package_reference/cli#accelerate-launch (also refer to issue 3)

Gradient Checkpointing

https://qywu.github.io/2019/05/22/explore-gradient-checkpointing.html

https://openai.com/research/sparse-transformer

fp16 - mixed precision

https://pytorch.org/docs/stable/notes/amp_examples.html

Quantization

Int8 - bitsandbytes / triton

int4 - gptq / ggml

Typology of Efficient Training

Data & Model Parallel

Param Efficient
