❗ IMPORTANT ❗

dali_backend is new and rapidly growing. Official tritonserver releases might lag behind on some features and bug fixes, so we encourage you to use the latest version of dali_backend. The Docker build section explains how to build a tritonserver Docker image with the main branch of dali_backend and a DALI nightly release. This is a way to get daily updates!

This repository contains code for DALI Backend for Triton Inference Server.

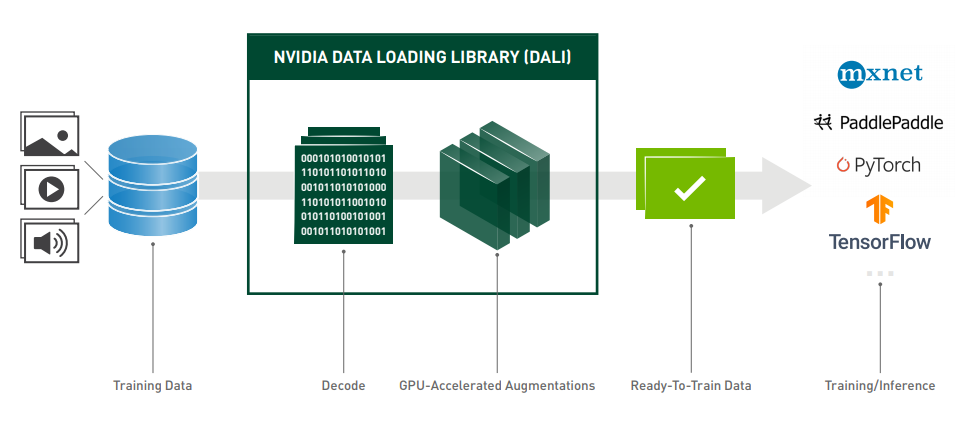

NVIDIA DALI®, the Data Loading Library, is a collection of highly optimized building blocks, and an execution engine, to accelerate the pre-processing of input data for deep learning applications. DALI provides both the performance and the flexibility to accelerate different data pipelines as one library. This library can then be easily integrated into different deep learning training and inference applications, regardless of the deep learning framework used.

To find out more about DALI, please refer to our main page. The Getting started and Tutorials pages will guide you through your first steps, and Supported operations will help you put together GPU-powered data processing pipelines.

Feel free to post an issue here or in DALI's GitHub repository.


A DALI data pipeline is expressed within Triton as a model. To create such a model, put together a DALI pipeline in Python and call the `Pipeline.serialize` method to generate a model file. As an example, we'll use a simple resizing pipeline:

```python
import nvidia.dali as dali

pipe = dali.pipeline.Pipeline(batch_size=256, num_threads=4, device_id=0)
with pipe:
    # Encoded images are fed by Triton through this named input
    images = dali.fn.external_source(device="cpu", name="DALI_INPUT_0")
    # "mixed" decoding: CPU input, GPU output
    images = dali.fn.image_decoder(images, device="mixed")
    images = dali.fn.resize(images, resize_x=224, resize_y=224)
    pipe.set_outputs(images)

# Write the serialized pipeline into the model repository
pipe.serialize(filename="/my/model/repository/path/dali/1/model.dali")
```

The model file must be placed in Triton's model repository. Here's an example:

```
model_repository
└── dali
    ├── 1
    │   └── model.dali
    └── config.pbtxt
```
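If you script your deployment, a layout like this can be prepared from Python before serializing. The following is a minimal sketch, not part of dali_backend: the helper name `prepare_dali_model_dir` and its defaults (`model_name="dali"`, `version=1`) are hypothetical and simply mirror the example tree.

```python
from pathlib import Path


def prepare_dali_model_dir(repo_root, model_name="dali", version=1):
    """Create <repo_root>/<model_name>/<version>/ and return the path where
    the serialized DALI pipeline (model.dali) is expected.

    Hypothetical helper for illustration; not a dali_backend API.
    """
    version_dir = Path(repo_root) / model_name / str(version)
    # parents=True creates the repository and model directories as needed;
    # exist_ok=True makes repeated calls harmless.
    version_dir.mkdir(parents=True, exist_ok=True)
    return version_dir / "model.dali"
```

You could then pass `str(prepare_dali_model_dir("model_repository"))` as the `filename` argument of `pipe.serialize`, and place `config.pbtxt` next to the `1` directory.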

As is typical in Triton, your DALI model file should be named `model.dali`. You can override this name in the model configuration by setting the `default_model_filename` option. Here's the whole `config.pbtxt` we use for the resizing pipeline example:

```
name: "dali"
backend: "dali"
max_batch_size: 256
input [
  {
    name: "DALI_INPUT_0"
    data_type: TYPE_UINT8
    dims: [ -1 ]
  }
]
output [
  {
    name: "DALI_OUTPUT_0"
    data_type: TYPE_FP32
    dims: [ 224, 224, 3 ]
  }
]
```
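To keep `config.pbtxt` in sync with the pipeline, you might generate it from the same script. This is a hypothetical helper (`render_dali_config` is not a dali_backend API) that reproduces the configuration above and optionally adds `default_model_filename`. It assumes a single `TYPE_UINT8` input and a single `TYPE_FP32` output, as in the example; `input_name` should match the `name` given to `external_source` in the pipeline.

```python
def render_dali_config(name="dali", max_batch_size=256,
                       input_name="DALI_INPUT_0", output_name="DALI_OUTPUT_0",
                       output_dims=(224, 224, 3), model_filename=None):
    """Return config.pbtxt text for a single-input, single-output DALI model.

    Hypothetical helper for illustration; not a dali_backend API.
    """
    lines = [
        f'name: "{name}"',
        'backend: "dali"',
        f"max_batch_size: {max_batch_size}",
    ]
    if model_filename is not None:
        # Replaces the default "model.dali" file name in the version directory
        lines.append(f'default_model_filename: "{model_filename}"')
    dims = ", ".join(str(d) for d in output_dims)
    lines += [
        "input [",
        "  {",
        f'    name: "{input_name}"',
        "    data_type: TYPE_UINT8",
        "    dims: [ -1 ]",
        "  }",
        "]",
        "output [",
        "  {",
        f'    name: "{output_name}"',
        "    data_type: TYPE_FP32",
        f"    dims: [ {dims} ]",
        "  }",
        "]",
    ]
    return "\n".join(lines) + "\n"
```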

- There's a high chance that you'll want to use the `ops.ExternalSource` operator to feed the encoded images (or any other data, for that matter) into DALI.
- Give your `ExternalSource` operator the same name you give to the input in `config.pbtxt`.
- DALI's `ImageDecoder` accepts data only from the CPU; keep this in mind when putting together your DALI pipeline.
- Triton accepts only a homogeneous batch shape. Feel free to pad your batch of encoded images with zeros.

- Due to DALI limitations, you might observe unnaturally increased memory consumption when defining an instance group for the DALI model with a `count` higher than 1. We suggest using the default instance group for DALI models.
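For the tip about padding encoded images to a homogeneous batch shape, a minimal NumPy sketch might look like this. It assumes each encoded image arrives as a Python `bytes` object; the function name `pad_encoded_batch` is hypothetical.

```python
import numpy as np


def pad_encoded_batch(encoded_images):
    """Zero-pad encoded images (raw bytes) into one uint8 array of shape
    (batch_size, max_len), so that Triton receives a homogeneous batch."""
    if not encoded_images:
        raise ValueError("expected at least one encoded image")
    # View each encoded file as a flat uint8 array (no copy)
    arrays = [np.frombuffer(img, dtype=np.uint8) for img in encoded_images]
    max_len = max(a.size for a in arrays)
    # Start from zeros so every sample shorter than max_len ends up zero-padded
    batch = np.zeros((len(arrays), max_len), dtype=np.uint8)
    for i, a in enumerate(arrays):
        batch[i, : a.size] = a
    return batch
```

The resulting `(batch_size, max_len)` array fits the `dims: [ -1 ]` input of the example configuration and could be sent as `DALI_INPUT_0`, for instance through the Triton Python client's `InferInput.set_data_from_numpy`.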

Building DALI Backend with Docker is as simple as:

```bash
git clone --recursive https://github.com/triton-inference-server/dali_backend.git
cd dali_backend
docker build -t tritonserver:dali-latest .
```

And `tritonserver:dali-latest` becomes your new tritonserver Docker image.

To build dali_backend, you'll need CMake 3.17+.

If you need a newer DALI version than the one provided in the tritonserver image, you can use DALI's nightly builds. Just install whatever DALI version you like using pip (refer to the link for more information on how to do it). In this case, while building dali_backend, you need to pass the `-D TRITON_SKIP_DALI_DOWNLOAD=ON` option to your CMake build. dali_backend will then find the latest DALI installed on your system and use that particular version.
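Before configuring with `-D TRITON_SKIP_DALI_DOWNLOAD=ON`, it can help to check which DALI wheels pip has installed. The sketch below uses only the standard library and assumes the wheels follow the `nvidia-dali*` package naming (release or nightly); the function name `installed_dali_distributions` is hypothetical, and the sketch only lists what is installed. It does not reproduce how dali_backend itself locates DALI.

```python
from importlib import metadata


def installed_dali_distributions():
    """Return sorted (name, version) pairs for installed pip distributions
    whose name starts with "nvidia-dali" (assumed DALI wheel naming)."""
    found = []
    for dist in metadata.distributions():
        meta = dist.metadata
        # Guard against distributions with missing or broken metadata
        name = (meta.get("Name") if meta is not None else None) or ""
        if name.lower().startswith("nvidia-dali"):
            found.append((name, dist.version))
    return sorted(found)


for name, version in installed_dali_distributions():
    print(f"{name} {version}")
```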

Building DALI Backend is really straightforward. One thing to remember is to clone the dali_backend repository with all the submodules:

```bash
git clone --recursive https://github.com/triton-inference-server/dali_backend.git
cd dali_backend
mkdir build
cd build
cmake ..
make
```

The build process will generate a `unittest` executable. You can use it to run the unit tests for DALI Backend.