forked from apache/spark


Commit


[SPARK-29052][DOCS][ML][PYTHON][CORE][R][SQL][SS] Create a Migration …

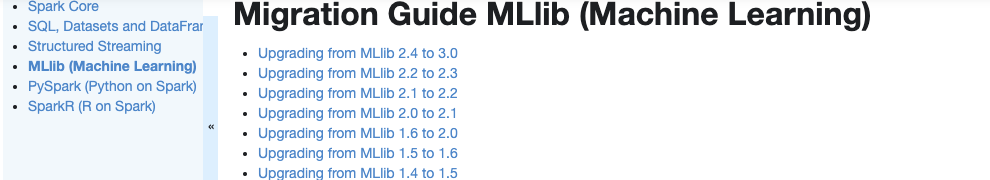

…Guide tap in Spark documentation ### What changes were proposed in this pull request? Currently, there is no migration section for PySpark, SparkCore and Structured Streaming. It is difficult for users to know what to do when they upgrade. This PR proposes to create create a "Migration Guide" tap at Spark documentation.   This page will contain migration guides for Spark SQL, PySpark, SparkR, MLlib, Structured Streaming and Core. Basically it is a refactoring. There are some new information added, which I will leave a comment inlined for easier review. 1. **MLlib** Merge [ml-guide.html#migration-guide](https://spark.apache.org/docs/latest/ml-guide.html#migration-guide) and [ml-migration-guides.html](https://spark.apache.org/docs/latest/ml-migration-guides.html) ``` 'docs/ml-guide.md' ↓ Merge new/old migration guides 'docs/ml-migration-guide.md' ``` 2. **PySpark** Extract PySpark specific items from https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html ``` 'docs/sql-migration-guide-upgrade.md' ↓ Extract PySpark specific items 'docs/pyspark-migration-guide.md' ``` 3. **SparkR** Move [sparkr.html#migration-guide](https://spark.apache.org/docs/latest/sparkr.html#migration-guide) into a separate file, and extract from [sql-migration-guide-upgrade.html](https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html) ``` 'docs/sparkr.md' 'docs/sql-migration-guide-upgrade.md' Move migration guide section ↘ ↙ Extract SparkR specific items docs/sparkr-migration-guide.md ``` 4. **Core** Newly created at `'docs/core-migration-guide.md'`. I skimmed resolved JIRAs at 3.0.0 and found some items to note. 5. **Structured Streaming** Newly created at `'docs/ss-migration-guide.md'`. I skimmed resolved JIRAs at 3.0.0 and found some items to note. 6. 
**SQL** Merged [sql-migration-guide-upgrade.html](https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html) and [sql-migration-guide-hive-compatibility.html](https://spark.apache.org/docs/latest/sql-migration-guide-hive-compatibility.html) ``` 'docs/sql-migration-guide-hive-compatibility.md' 'docs/sql-migration-guide-upgrade.md' Move Hive compatibility section ↘ ↙ Left over after filtering PySpark and SparkR items 'docs/sql-migration-guide.md' ``` ### Why are the changes needed? In order for users in production to effectively migrate to higher versions, and detect behaviour or breaking changes before upgrading and/or migrating. ### Does this PR introduce any user-facing change? Yes, this changes Spark's documentation at https://spark.apache.org/docs/latest/index.html. ### How was this patch tested? Manually build the doc. This can be verified as below: ```bash cd docs SKIP_API=1 jekyll build open _site/index.html ``` Closes apache#25757 from HyukjinKwon/migration-doc. Authored-by: HyukjinKwon <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>
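The manual check described under "How was this patch tested?" could be complemented by a small script. The following Python sketch is not part of the commit. It assumes the site was built into `docs/_site` and that every guide named in the commit is rendered to an `.html` page with the same base name; `missing_pages` and `EXPECTED_PAGES` are hypothetical names introduced here for illustration.

```python
from pathlib import Path

# Pages this commit is expected to produce, taken from the file names in the
# commit message and the new navigation menu (assumed to map 1:1 to .html).
EXPECTED_PAGES = [
    "migration-guide.html",
    "core-migration-guide.html",
    "sql-migration-guide.html",
    "ss-migration-guide.html",
    "ml-migration-guide.html",
    "pyspark-migration-guide.html",
    "sparkr-migration-guide.html",
]


def missing_pages(site_dir):
    """Return the expected pages that do not exist under site_dir."""
    root = Path(site_dir)
    return [name for name in EXPECTED_PAGES if not (root / name).is_file()]


if __name__ == "__main__":
    # Assumption: run from the repository root after `jekyll build` in docs/.
    missing = missing_pages("docs/_site")
    print("missing pages:", missing if missing else "none")
```

An empty result only shows that the pages were generated; it says nothing about whether their content or the left-hand menu renders correctly, so opening `_site/index.html` remains the real check.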


1 parent b91648c, commit 7d4eb38

Showing 17 changed files with 1,295 additions and 1,157 deletions.


| Original file line number | Diff line number | Diff line change |
|---|---|---|
| | | @@ -0,0 +1,12 @@ |
| | | - text: Spark Core |
| | | url: core-migration-guide.html |
| | | - text: SQL, Datasets and DataFrame |
| | | url: sql-migration-guide.html |
| | | - text: Structured Streaming |
| | | url: ss-migration-guide.html |
| | | - text: MLlib (Machine Learning) |
| | | url: ml-migration-guide.html |
| | | - text: PySpark (Python on Spark) |
| | | url: pyspark-migration-guide.html |
| | | - text: SparkR (R on Spark) |
| | | url: sparkr-migration-guide.html |


| Original file line number | Diff line number | Diff line change |
|---|---|---|
| | | @@ -0,0 +1,6 @@ |
| | | <div class="left-menu-wrapper"> |
| | | <div class="left-menu"> |
| | | <h3><a href="migration-guide.html">Migration Guide</a></h3> |
| | | {% include nav-left.html nav=include.nav-migration %} |
| | | </div> |
| | | </div> |


| Original file line number | Diff line number | Diff line change |
|---|---|---|
| | | @@ -0,0 +1,32 @@ |
| | | --- |
| | | layout: global |
| | | title: "Migration Guide: Spark Core" |
| | | displayTitle: "Migration Guide: Spark Core" |
| | | license: \| |
| | | Licensed to the Apache Software Foundation (ASF) under one or more |
| | | contributor license agreements. See the NOTICE file distributed with |
| | | this work for additional information regarding copyright ownership. |
| | | The ASF licenses this file to You under the Apache License, Version 2.0 |
| | | (the "License"); you may not use this file except in compliance with |
| | | the License. You may obtain a copy of the License at |
| | | http://www.apache.org/licenses/LICENSE-2.0 |
| | | Unless required by applicable law or agreed to in writing, software |
| | | distributed under the License is distributed on an "AS IS" BASIS, |
| | | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. |
| | | See the License for the specific language governing permissions and |
| | | limitations under the License. |
| | | --- |
| | | |
| | | * Table of contents |
| | | {:toc} |
| | | |
| | | ## Upgrading from Core 2.4 to 3.0 |
| | | |
| | | - In Spark 3.0, the deprecated method `TaskContext.isRunningLocally` has been removed. Local execution was removed, and the method has always returned `false` since then. |
| | | |
| | | - In Spark 3.0, the deprecated methods `shuffleBytesWritten`, `shuffleWriteTime` and `shuffleRecordsWritten` in `ShuffleWriteMetrics` have been removed. Instead, use `bytesWritten`, `writeTime` and `recordsWritten` respectively. |
| | | |
| | | - In Spark 3.0, the deprecated method `AccumulableInfo.apply` has been removed because creating `AccumulableInfo` is disallowed. |
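The renames listed in the Core guide above lend themselves to a quick textual scan of a code base before upgrading. The sketch below is an illustration only, not a tool shipped with Spark: `REMOVED_IN_3_0` and `find_removed_apis` are hypothetical names, and the match is purely lexical, so unrelated identifiers with the same name would also be reported. `AccumulableInfo.apply` is left out because calls to it are not reliably detectable by name alone.

```python
import re

# Names removed in Spark 3.0 and the guidance from the migration notes above.
REMOVED_IN_3_0 = {
    "isRunningLocally": "removed; it always returned false",
    "shuffleBytesWritten": "use bytesWritten",
    "shuffleWriteTime": "use writeTime",
    "shuffleRecordsWritten": "use recordsWritten",
}

# Match any removed name as a whole word.
_PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, REMOVED_IN_3_0)) + r")\b"
)


def find_removed_apis(source):
    """Return (line_number, name, advice) for each removed name in source."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in _PATTERN.finditer(line):
            name = match.group(1)
            hits.append((lineno, name, REMOVED_IN_3_0[name]))
    return hits


if __name__ == "__main__":
    # Made-up Scala-like snippet used only to exercise the scanner.
    sample = "val n = metrics.shuffleBytesWritten\nval t = metrics.writeTime\n"
    for hit in find_removed_apis(sample):
        print(hit)
```

Only the first line of the sample is flagged; `writeTime` on the second line is already the replacement name and is not matched by the whole-word pattern.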


| Original file line number | Diff line number | Diff line change |
|---|---|---|
| | | @@ -0,0 +1,30 @@ |
| | | --- |
| | | layout: global |
| | | title: Migration Guide |
| | | displayTitle: Migration Guide |
| | | license: \| |
| | | Licensed to the Apache Software Foundation (ASF) under one or more |
| | | contributor license agreements. See the NOTICE file distributed with |
| | | this work for additional information regarding copyright ownership. |
| | | The ASF licenses this file to You under the Apache License, Version 2.0 |
| | | (the "License"); you may not use this file except in compliance with |
| | | the License. You may obtain a copy of the License at |
| | | http://www.apache.org/licenses/LICENSE-2.0 |
| | | Unless required by applicable law or agreed to in writing, software |
| | | distributed under the License is distributed on an "AS IS" BASIS, |
| | | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. |
| | | See the License for the specific language governing permissions and |
| | | limitations under the License. |
| | | --- |
| | | |
| | | This page documents sections of the migration guide for each component in order |
| | | for users to migrate effectively. |
| | | |
| | | * [Spark Core](core-migration-guide.html) |
| | | * [SQL, Datasets, and DataFrame](sql-migration-guide.html) |
| | | * [Structured Streaming](ss-migration-guide.html) |
| | | * [MLlib (Machine Learning)](ml-migration-guide.html) |
| | | * [PySpark (Python on Spark)](pyspark-migration-guide.html) |
| | | * [SparkR (R on Spark)](sparkr-migration-guide.html) |
