
Commit


Merge PR #9 | Update and create documentation using GitHub Pages

from kreshuklab/qy/create-docs


Showing 12 changed files with 1,080 additions and 173 deletions.


@@ -0,0 +1,57 @@

```yaml
name: Build and Deploy Documentation

on:
  push:
    branches:
      - main
      - qy/create-docs

permissions:
  contents: write

jobs:
  build-and-deploy:
    runs-on: ubuntu-latest

    steps:
      # Checkout the repository
      - name: Checkout Code
        uses: actions/checkout@v4

      # Configure Git credentials
      - name: Configure Git Credentials
        run: |
          git config user.name "github-actions[bot]"
          git config user.email "41898282+github-actions[bot]@users.noreply.github.com"

      # Set up Python
      - name: Set up Python
        uses: actions/setup-python@v5
        with:
          python-version: "3.x"

      # Generate cache ID
      - name: Set Cache ID
        run: echo "cache_id=$(date --utc '+%V')" >> $GITHUB_ENV

      # Cache Python dependencies
      - name: Cache Python Dependencies
        uses: actions/cache@v4
        with:
          key: mkdocs-material-${{ env.cache_id }}
          path: .cache
          restore-keys: |
            mkdocs-material-

      # Install MkDocs and Plugins, Deploy Documentation
      - name: Install Dependencies and Deploy Docs
        run: |
          pip install mkdocs-material \
            mkdocs-git-revision-date-localized-plugin \
            mkdocs-git-committers-plugin-2 \
            mkdocs-autorefs \
            mkdocstrings[python] \
            markdown-exec
          mkdocs gh-deploy --force
        env:
          MKDOCS_GIT_COMMITTERS_APIKEY: ${{ secrets.MKDOCS_GIT_COMMITTERS_APIKEY }}
```
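The "Set Cache ID" step writes the ISO 8601 week number (`date --utc '+%V'`) into `cache_id`, so the `mkdocs-material-<week>` key changes once a week, while `restore-keys: mkdocs-material-` lets the first run of a new week start from an earlier week's `.cache`. The Python sketch below mirrors that key scheme. The helpers `cache_key` and `pick_cache` are hypothetical, and `pick_cache` is only a simplified model of the `actions/cache` lookup (exact key first, then the most recently created entry matching a restore-key prefix); it ignores branch scoping and cache eviction.

```python
from datetime import date, datetime, timezone
from typing import List, Optional


def cache_key(today: Optional[date] = None) -> str:
    """Mirror `date --utc '+%V'`: zero-padded ISO 8601 week number (01-53)."""
    if today is None:
        today = datetime.now(timezone.utc).date()
    week = today.isocalendar()[1]
    return f"mkdocs-material-{week:02d}"


def pick_cache(key: str, restore_prefixes: List[str], existing: List[str]) -> Optional[str]:
    """Simplified model of the actions/cache lookup (hypothetical helper).

    `existing` holds stored cache keys, oldest first. An exact match wins;
    otherwise each restore prefix is tried in order and the most recently
    created matching key is returned.
    """
    if key in existing:
        return key
    for prefix in restore_prefixes:
        matches = [k for k in existing if k.startswith(prefix)]
        if matches:
            return matches[-1]  # newest matching entry
    return None


# Usage: week 29 has no cache yet, so last week's entry is restored.
print(cache_key(date(2024, 7, 15)))  # mkdocs-material-29
print(pick_cache("mkdocs-material-29", ["mkdocs-material-"],
                 ["mkdocs-material-27", "mkdocs-material-28"]))  # mkdocs-material-28
```

Since the key depends only on the UTC week, every run in the same week hits the same entry, so a new `.cache` snapshot is saved at most once per week.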


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -1,107 +1,73 @@ | ||

| # Plant Nuclei Segmentation Pipelines <!-- omit in toc --> | ||

| # Nuclear Segmentation Pipelines <!-- omit in toc --> | ||

|

|

||

| This repository hosts the code and guides for the pipelines used in the paper [_A deep learning-based toolkit for 3D nuclei segmentation and quantitative analysis in cellular and tissue context_](https://www.biorxiv.org/content/10.1101/2024.02.19.580954v1). It is structured into four folders: | ||

|  | ||

|

|

||

| The GoNuclear repository hosts the code and guides for the pipelines used in the paper [_A deep learning-based toolkit for 3D nuclei segmentation and quantitative analysis in cellular and tissue context_](https://doi.org/10.1242/dev.202800). It is structured into four folders: | ||

|

|

||

| - **stardist/** contains a 3D StarDist training and inference pipeline, `run-stardist`. | ||

| - **plantseg/** contains configuration files for training and inference with PlantSeg. | ||

| - **cellpose/** contains scripts for training and inference with Cellpose. | ||

| - **evaluation/** contains modules for evaluating the segmentation results. | ||

|

|

||

| ## Table of Contents <!-- omit in toc --> | ||

|

|

||

| - [Tools and Workflows](#tools-and-workflows) | ||

| - [StarDist](#stardist) | ||

| - [PlantSeg](#plantseg) | ||

| - [Cellpose](#cellpose) | ||

| - [Data](#data) | ||

| - [Training Data](#training-data) | ||

| - [Preparing Data for Inference](#preparing-data-for-inference) | ||

| - [Cite](#cite) | ||

|

|

||

|

|

||

| ## Tools and Workflows | ||

|

|

||

| ### StarDist | ||

|

|

||

| *See [`run-stardist`'s README.md](stardist/README.md) for more details.* | ||

|

|

||

| This is one of the most important contributions of this repository. If your nuclei are more or less uniform in shape, please consider using the `run-stardist` pipeline in this repository. It generates separate, round instance segmentation masks for your nuclei images. | ||

|

|

||

| - The code and tutorial for running StarDist inference are in the `stardist/` folder | ||

| - The pretrained model is automatically downloaded during inference (also available at [BioImage.IO: StarDist Plant Nuclei 3D ResNet](https://bioimage.io/#/?id=10.5281%2Fzenodo.8421755)) | ||

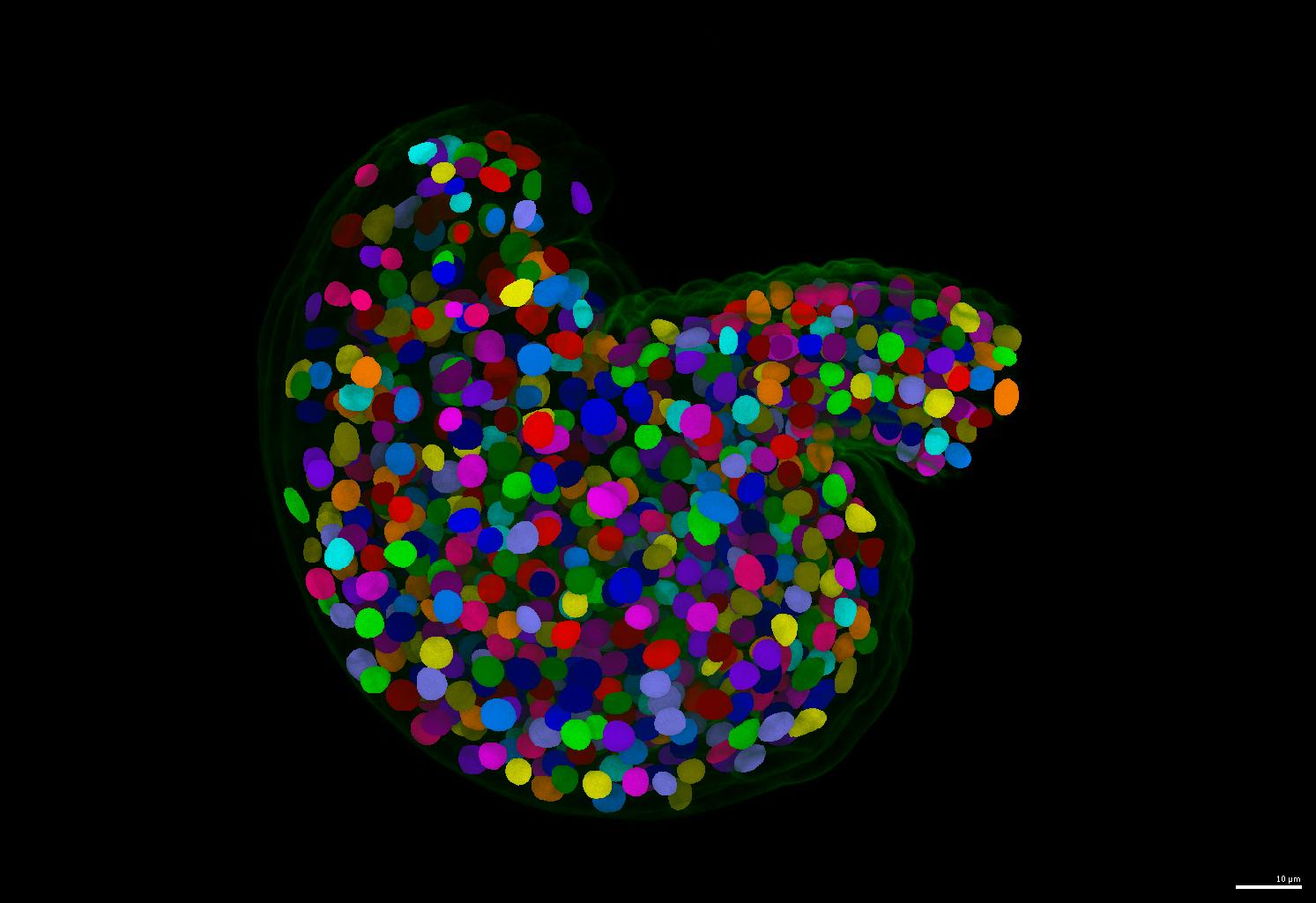

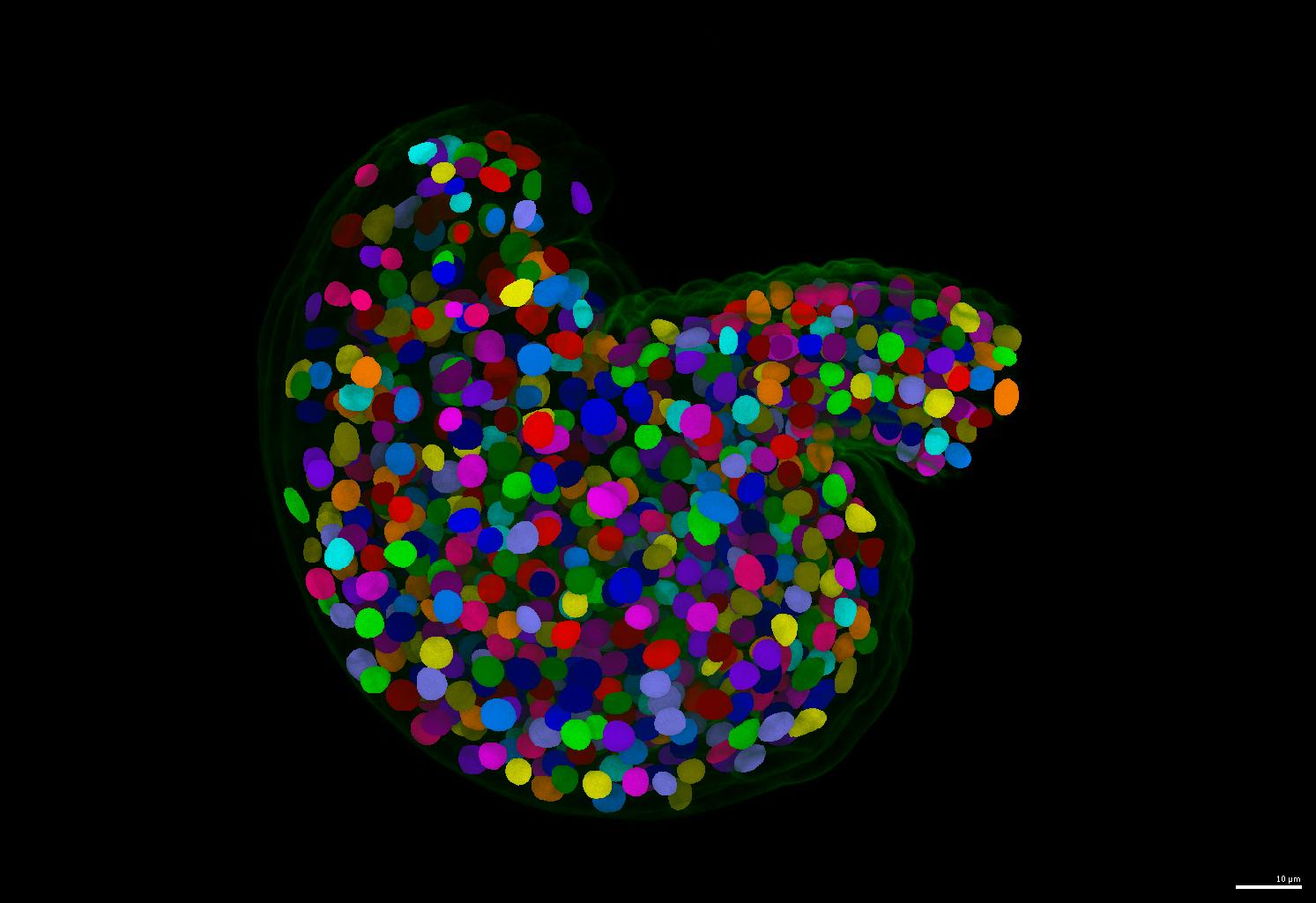

| - An example of segmentation results is shown below. | ||

|

|

||

|  | ||

|

|

||

| ### PlantSeg | ||

|

|

||

| *See [PlantSeg's README.md](plantseg/README.md) for more details.* | ||

|

|

||

| If your nuclei have irregular shapes, please consider using the PlantSeg pipeline. It generates instance masks for your nuclei images regardless of nucleus size and shape. | ||

|

|

||

| - The code and tutorial for running PlantSeg inference are in the `plantseg/` folder | ||

| - The pretrained model is automatically downloaded during inference (also available at [BioImage.IO: PlantSeg Plant Nuclei 3D UNet](https://bioimage.io/#/?id=10.5281%2Fzenodo.8401064)) | ||

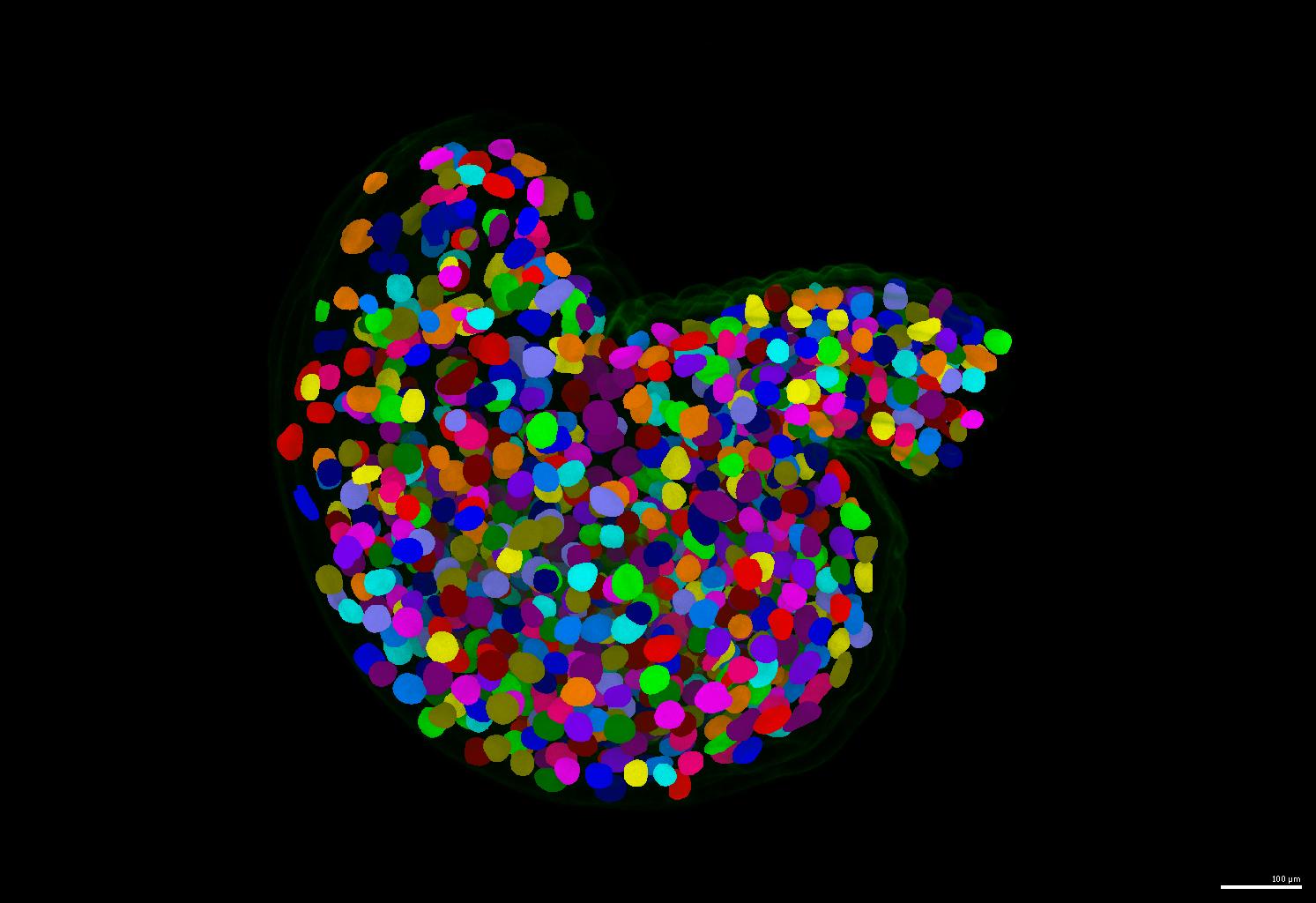

| - An example of segmentation results is shown below. | ||

|

|

||

|  | ||

|

|

||

| ### Cellpose | ||

|

|

||

| *See [Cellpose's README.md](cellpose/README.md) for more details.* | ||

|

|

||

| - The guide for running Cellpose inference and training is in the `cellpose/` folder | ||

|

|

||

| ## Data | ||

|

|

||

| ### Training Data | ||

|

|

||

| The training data is publicly available on [BioImage Archive](https://www.ebi.ac.uk/biostudies/BioImages/studies/S-BIAD1026). | ||

|

|

||

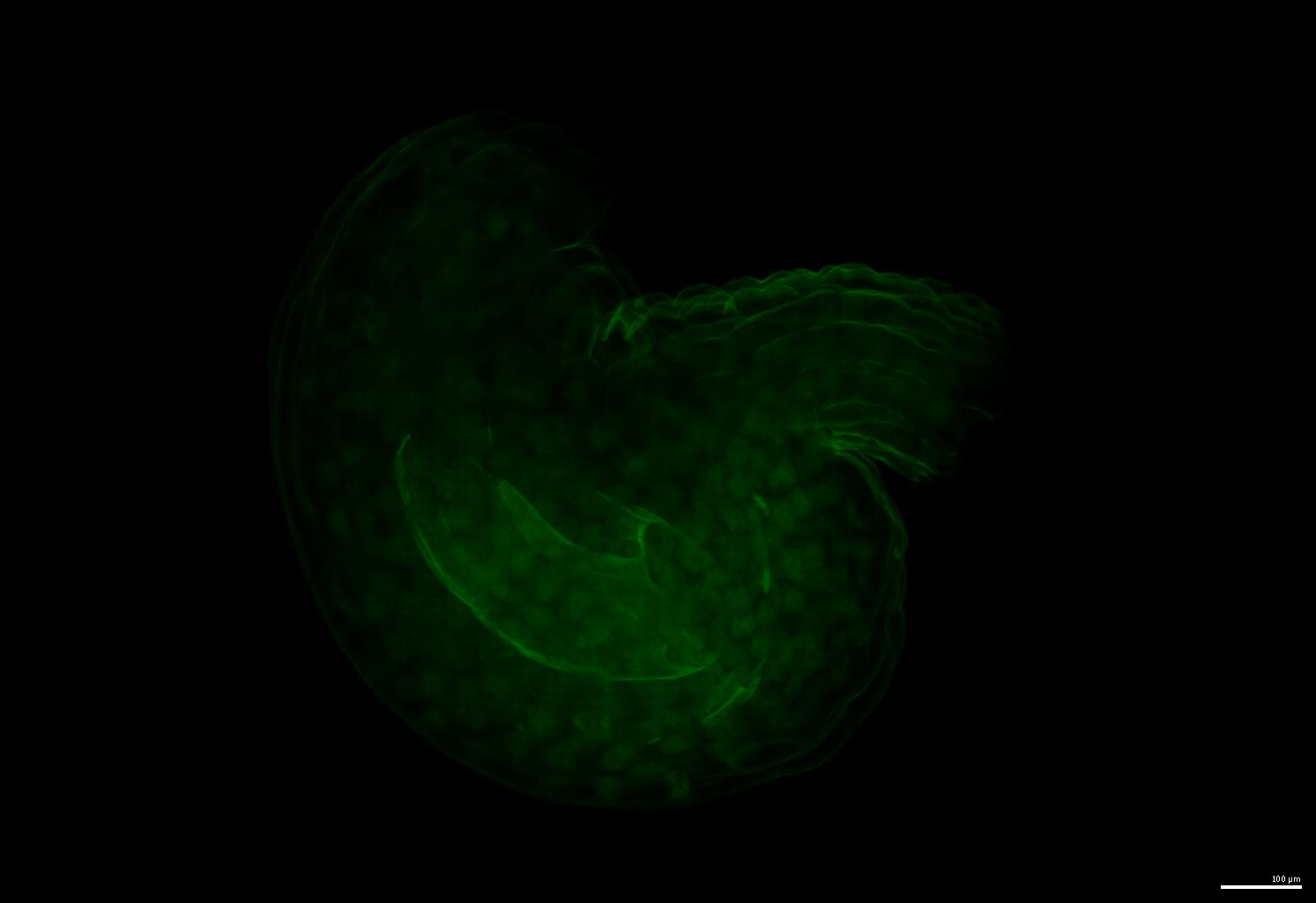

| An example of the raw image: | ||

|

|

||

|  | ||

|

|

||

| Some key information about the training data is listed below: | ||

|

|

||

| ```python | ||

| original_voxel_size = { # z, y, x | ||

| 1135: [0.28371836501901143, 0.12678642066720086, 0.12678642066720086], # validation | ||

| 1136: [0.2837183895131086, 0.12756971653115998, 0.12756971653115998], # training | ||

| 1137: [0.2837183895131086, 0.1266211463645486, 0.1266211463645486 ], # training | ||

| 1139: [0.2799036917562724, 0.12674335484590543, 0.12674335484590543], # training | ||

| 1170: [0.27799632231404964, 0.12698523961670266, 0.12698522349145364], # training | ||

| } # [0.2837, 0.1268, 0.1268] is taken as the median | ||

|

|

||

| original_median_extents = { # z, y, x | ||

| 1135: [16, 32, 33], # validation | ||

| 1136: [16, 32, 32], # training | ||

| 1137: [16, 32, 32], # training | ||

| 1139: [16, 32, 33], # training | ||

| 1170: [16, 29, 30], # training | ||


| } # [16, 32, 32] is taken as the median | ||

| and are described in [**GoNuclear documentation** :book:](https://kreshuklab.github.io/go-nuclear/). | ||

|

|

||

| ## Data and Models | ||

|

|

||

| Please go to [BioImage Archive S-BIAD1026](https://www.ebi.ac.uk/biostudies/BioImages/studies/S-BIAD1026) for the training data and models. I organised them in the following structure: | ||

|

|

||

| ```bash | ||

| Training data | ||

| ├── 2d/ | ||

| │ ├── isotropic/ | ||

| │ │ ├── gold/ | ||

| │ │ └── initial/ | ||

| │ └── original/ | ||

| │ ├── gold/ | ||

| │ └── README.txt | ||

| └── 3d_all_in_one/ | ||

| ├── 1135.h5 | ||

| ├── 1136.h5 | ||

| ├── 1137.h5 | ||

| ├── 1139.h5 | ||

| └── 1170.h5 | ||

|

|

||

| Models | ||

| ├── cellpose/ | ||

| │ ├── cyto2_finetune/ | ||

| │ │ └── gold/ | ||

| │ ├── nuclei_finetune/ | ||

| │ │ ├── gold/ | ||

| │ │ └── initial/ | ||

| │ └── scratch_trained/ | ||

| │ └── gold/ | ||

| ├── plantseg/ | ||

| │ └── 3dunet/ | ||

| │ ├── gold/ | ||

| │ ├── initial/ | ||

| │ ├── platinum/ | ||

| │ └── train_example.yml | ||

| └── stardist/ | ||

| ├── resnet/ | ||

| │ ├── gold/ | ||

| │ ├── initial/ | ||

| │ └── platinum/ | ||

| ├── train_example.yml | ||

| └── unet/ | ||

| └── gold/ | ||

| ``` | ||

|

|

||

| **Note for training Cellpose:** The best image form for training StarDist and PlantSeg models is the original one, i.e. the linked dataset is the one that provides the best results. However, Cellpose only takes 2D training data, so for Cellpose the images are prepared as 2D slices of the rescaled, isotropic 3D images. The 2D slices include all XY, XZ and YZ slices, shuffled by a random prefix in the file name, and are saved as TIFF files. | ||

|

|

||

| ### Preparing Data for Inference | ||

|

|

||

| Both HDF5 and TIFF files can be used directly for `run-stardist` and `plant-seg` inference. See the README.md in the respective folder for more details. | ||

|

|

||

| ## Cite | ||

| ## Citation | ||

|

|

||

| If you find this work useful, please cite our paper and the respective tools' papers: | ||

|

|

||

| ```bibtex | ||

| @article {Vijayan2024.02.19.580954, | ||

| author = {Athul Vijayan and Tejasvinee Atul Mody and Qin Yu and Adrian Wolny and Lorenzo Cerrone and Soeren Strauss and Miltos Tsiantis and Richard S. Smith and Fred Hamprecht and Anna Kreshuk and Kay Schneitz}, | ||

| title = {A deep learning-based toolkit for 3D nuclei segmentation and quantitative analysis in cellular and tissue context}, | ||

| elocation-id = {2024.02.19.580954}, | ||

| year = {2024}, | ||

| doi = {10.1101/2024.02.19.580954}, | ||

| publisher = {Cold Spring Harbor Laboratory}, | ||

| URL = {https://www.biorxiv.org/content/early/2024/02/21/2024.02.19.580954}, | ||

| eprint = {https://www.biorxiv.org/content/early/2024/02/21/2024.02.19.580954.full.pdf}, | ||

| journal = {bioRxiv} | ||

| @article{vijayan2024deep, | ||

| title={A deep learning-based toolkit for 3D nuclei segmentation and quantitative analysis in cellular and tissue context}, | ||

| author={Vijayan, Athul and Mody, Tejasvinee Atul and Yu, Qin and Wolny, Adrian and Cerrone, Lorenzo and Strauss, Soeren and Tsiantis, Miltos and Smith, Richard S and Hamprecht, Fred A and Kreshuk, Anna and others}, | ||

| journal={Development}, | ||

| volume={151}, | ||

| number={14}, | ||

| year={2024}, | ||

| publisher={The Company of Biologists} | ||

| } | ||

| ``` |
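The README's `original_voxel_size` and `original_median_extents` tables state that `[0.2837, 0.1268, 0.1268]` and `[16, 32, 32]` are taken as the medians. The short Python check below assumes the median is taken per axis over all five volumes (validation volume 1135 included), which reproduces both values; with only the four training volumes the z median would instead be about 0.2818. The `rescale_factors` helper is a hypothetical illustration of turning a volume's voxel size into per-axis zoom factors towards that median, not code from the repository.

```python
from statistics import median

# Voxel sizes (z, y, x) per volume, copied from the README.
original_voxel_size = {
    1135: [0.28371836501901143, 0.12678642066720086, 0.12678642066720086],  # validation
    1136: [0.2837183895131086, 0.12756971653115998, 0.12756971653115998],   # training
    1137: [0.2837183895131086, 0.1266211463645486, 0.1266211463645486],     # training
    1139: [0.2799036917562724, 0.12674335484590543, 0.12674335484590543],   # training
    1170: [0.27799632231404964, 0.12698523961670266, 0.12698522349145364],  # training
}

# Median nucleus extents (z, y, x) in voxels, copied from the README.
original_median_extents = {
    1135: [16, 32, 33], 1136: [16, 32, 32], 1137: [16, 32, 32],
    1139: [16, 32, 33], 1170: [16, 29, 30],
}


def per_axis_median(table):
    """Median of each axis (z, y, x), taken independently across all volumes."""
    return [median(axis) for axis in zip(*table.values())]


def rescale_factors(voxel_size, target):
    """Hypothetical helper: per-axis zoom factor that resamples a volume with
    `voxel_size` to `target` (a factor > 1 means upsampling along that axis)."""
    return [v / t for v, t in zip(voxel_size, target)]


target = [round(v, 4) for v in per_axis_median(original_voxel_size)]
print(target)                                    # [0.2837, 0.1268, 0.1268]
print(per_axis_median(original_median_extents))  # [16, 32, 32]
```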

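The README's "Note for training Cellpose" describes the 2D training set as all XY, XZ and YZ slices of the rescaled, isotropic 3D volumes, shuffled by a random prefix in the file name. Below is a minimal NumPy sketch of that slicing, assuming a `(z, y, x)` array that is already isotropic; the function name `isotropic_slices`, the file-name pattern and the six-digit prefix are hypothetical, and writing the slices to TIFF (for example with `tifffile.imwrite`) is left out.

```python
import random

import numpy as np


def isotropic_slices(volume, stem, seed=0):
    """Cut a (z, y, x) volume into all XY, XZ and YZ 2D slices.

    Returns {file_name: 2D array}. Each name starts with a unique random
    number, so sorting the files by name mixes the three orientations.
    """
    volume = np.asarray(volume)
    if volume.ndim != 3:
        raise ValueError("expected a 3D (z, y, x) volume")
    nz, ny, nx = volume.shape
    planes = {
        "xy": [volume[i, :, :] for i in range(nz)],  # fixed z
        "xz": [volume[:, i, :] for i in range(ny)],  # fixed y
        "yz": [volume[:, :, i] for i in range(nx)],  # fixed x
    }
    rng = random.Random(seed)
    prefixes = iter(rng.sample(range(10**6), nz + ny + nx))  # no duplicates
    slices = {}
    for plane, stack in planes.items():
        for idx, image in enumerate(stack):
            slices[f"{next(prefixes):06d}_{stem}_{plane}{idx:03d}.tif"] = image
    return slices


# Usage: a toy 4 x 5 x 6 volume gives 4 + 5 + 6 = 15 slices.
toy = np.zeros((4, 5, 6), dtype=np.uint8)
names = sorted(isotropic_slices(toy, "1136"))
print(len(names))  # 15
```

Because the prefixes depend only on `seed` and the volume shape, calling the function on a raw volume and on its label volume with the same `seed` yields identical file names, which keeps image/mask pairs matched after shuffling.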